Bryan Johnson is a fool. He also just so happens to be, to me, one of the most interesting fools in recent history.

If you’re graciously unfamiliar with the former Braintree founder and chairman turned messianic health guru, I’ll provide a brief synopsis of what’s relevant to today’s conversation. There’s no shortage of longer profiles and thinkpieces out there if you’d like to dive deeper.1

Johnson claims to be “the most biologically measured person in history”, successfully “aging in reverse” as a result of his ascetic lifestyle, monomaniacal focus on health, and millions of dollars spent annually on various interventions.

He takes over a hundred supplements and medications every day. He flies abroad for experimental medical procedures like gene therapies and stem cell treatments not yet approved in the US. He deems eating dessert (or any health-negative habit, no matter how small) “an act of violence”.

He is dedicated, no doubt. There is the slight problem of his two ulterior motives2:

- Building a slightly-alarming cult of personality around himself
- Selling a variety of supplements with dubious marketing claims

Also, he goes about his personal pursuit of health goals in just about the dumbest way possible.

He tries everything all at once, at scale - hundreds of interventions with no regard to causality, confounding, or even estimating the impact of any given intervention.3 He is equally happy to source interventions from either established medical consensus or new age health quackery (like special “grounding” bedsheets that plug in to a wall outlet to “connect you to the earth’s subtle electromagnetic charge”, like our ancestors were, or whatever). And he measures his outcomes with a wide range of nonsense metrics - many of them seemingly invented by his former doctor Oliver Zolman, like measuring the “biological age” of his skin pores.

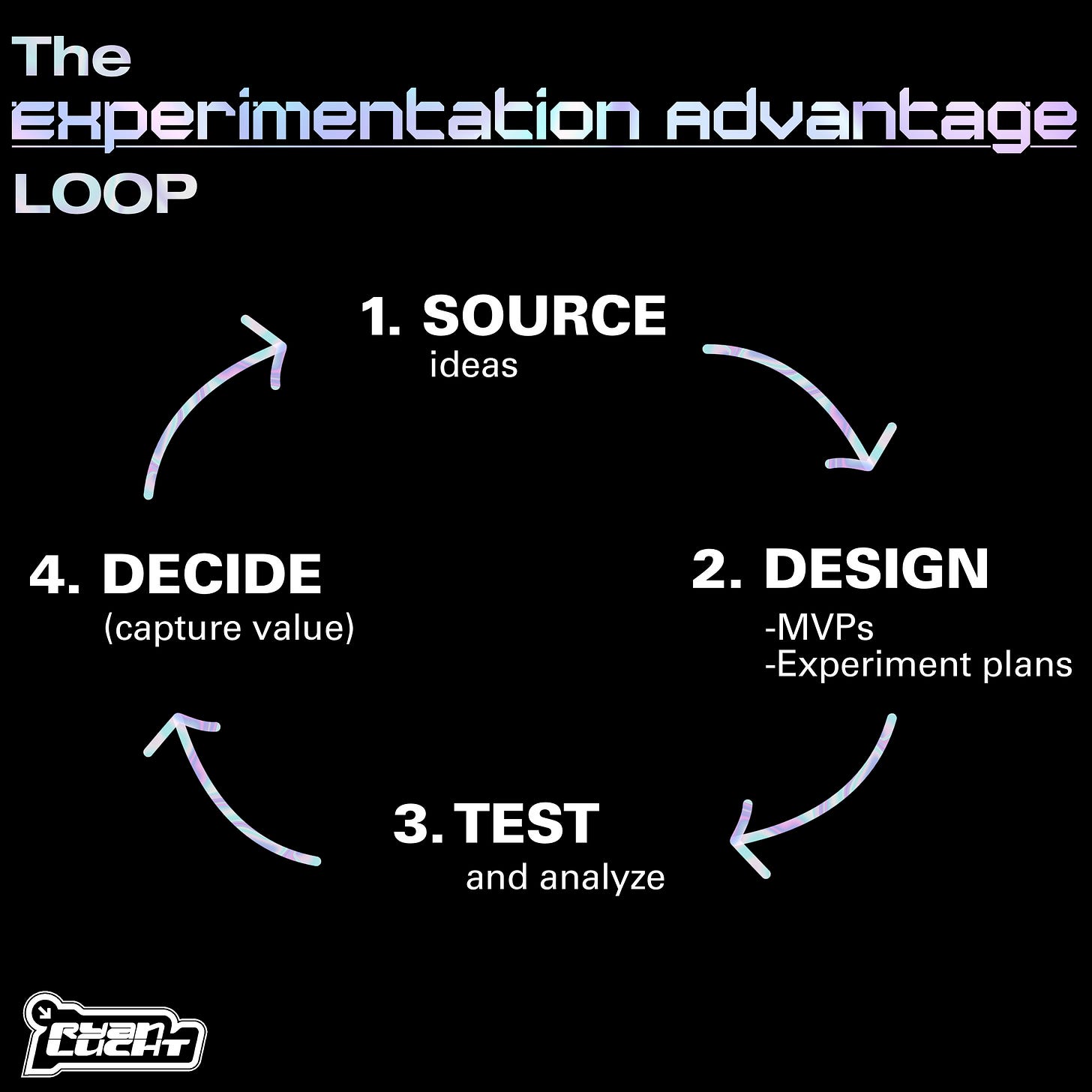

In other words, he has no Experimentation Advantage. No structured way to learn, ergo no flywheel effect. He is a classic fool of randomness.4

I am smarter than Bryan Johnson.

Unless, of course, you want to consider his net worth (a centi-millionaire), millions of followers, and actual health outcomes as evidence to the contrary. In which case he is far smarter than me. But I also give myself far lower odds of my life totally imploding5 and am not facing numerous allegations of illegal employment practices and generally distasteful behavior. Plus he’s got like a decade+ on me - I’m just getting started, baby.

If I separate the art from the artist, so to speak, there’s something that I find messily inspiring about Johnson’s original ideas though.

His original “first principle” in creating Blueprint, the name he gives both his protocol and supplement company, was to “remove himself” from the decision-making about his health and create a data-driven feedback loop:

My team and I had endeavored to do what no one had ever done: enable a body to run itself. Practically, I do this by routinely taking hundreds of measurements of my body’s biological processes, enabling my heart, liver, lungs and kidneys to speak for themselves what they need to be in their ideal state.

Aspire to look and feel your very best? Opt into a system where your body + science and data do the work. It’s akin to saying yes to the internet, computers and AI to improve our lives in ways that exceed our natural abilities.

This Automation - and the elimination of self destructive behaviors - allows us to improve at the rate at which you can automate important functions without thinking about them - in this case, your health. Improve at the speed of compounded gains…

(from his original landing page for Blueprint, full of philosophical writing that ranges from nonsense to genuinely novel - far more interesting than the supplement-shilling drivel he has had to reduce his message into as he commercializes further)

Obviously this is not what Johnson has built. But I couldn’t help but wonder what my own version of that might look like…

I don’t have hundreds of millions of dollars, or nearly as much free time on my hands. But I do understand how to actually leverage an Experimentation Advantage. So earlier this year, I started to tinker with the idea. I’d like to start sharing some of what I’m learning in a new series for this newsletter that I’m calling the Personal Lab.

Let's look at how a more intentional approach to the messy business of personal health optimization might go, focusing on the following areas:

- Building a hierarchy of evidence for decision-making
- Measuring the right metrics to quantify my health
- Selecting the right potential interventions for testable hypotheses

A More Personal Hierarchy of Evidence

The interesting thing about health is how heterogeneous individual outcomes can be from any given treatment. Whether due to genetic variation, environmental variables, or any number of other confounders, what works for many may not work for me. In other words, even following the most rigorous RCT and meta-analysis evidence, I may find many personal “false positives”. E.g. “first line” medications for many conditions have high non-response rates. (Perhaps the most well-known examples lie in the mental health field, where SSRIs prescribed for depression only work about half of the time6, but this is also true of things like statin therapy for lowering cholesterol levels, where 15-20% of patients appear to be statin resistant.7)

On the other hand, if I never try things for myself, I’ll likely miss “false negative errors” too: medical journals publish peer-reviewed Case Reports with a keen eye towards documenting unexpected outcomes, surprising associations, or novel therapies observed in single cases.8

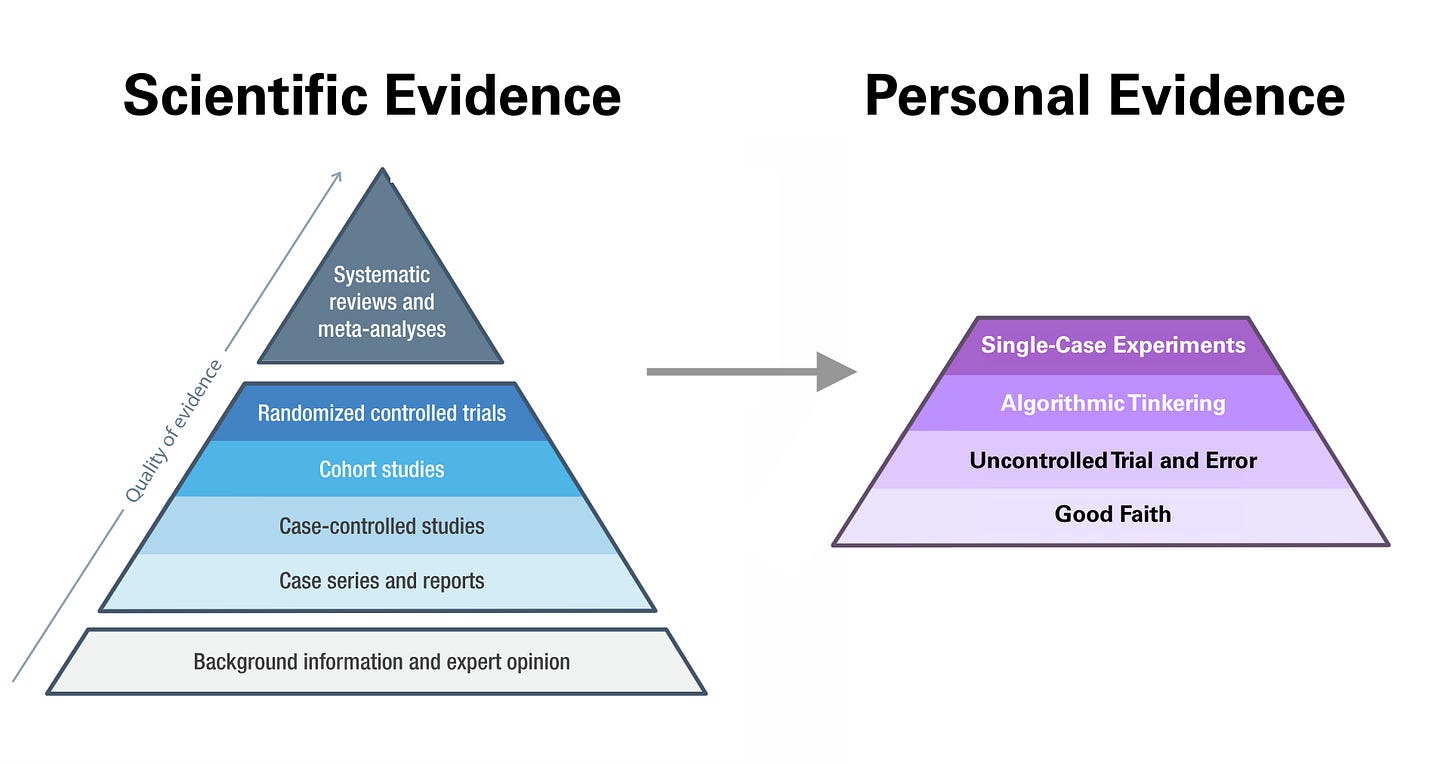

So while published RCTs and meta-analyses are certainly the gold standard of scientific evidence, finding applicable insights and making personal health decisions requires a second, parallel “hierarchy of evidence” - one that gives otherwise undue priority to data I’m able to collect about myself.

Here’s how I might construct such a hierarchy of evidence:

Of course, the quality of scientific evidence about the idea/intervention gives me a sort of prior to consider when deciding what “personal evidence” I’ll demand. I am very happy to take it on “good faith” that getting my Vitamin D is important and make the small investment in a supplement to address my deficiency. The grounding bedsheets, on the other hand, would be subjected to extreme scrutiny via experiment, assuming I ever felt the urge to try them.

To introduce what I mean by some of these terms, let’s take nutrition and diet as an example. What would be the highest-quality evidence available to help me determine how many total calories to eat, what amounts of each macronutrient I should aim for, and what foods I should eat to get there?

Immediately we get into trouble - how do I know if I should be gaining weight, losing weight, or just maintaining my current bodyweight? We’ll have to put this discussion aside for the later section on metrics, so for now let’s jump to my conclusion that I’d like to both gain muscle and lose fat, but I have a lot more progress to make on the former. (I’ll share how I came to this conclusion in a bit.)

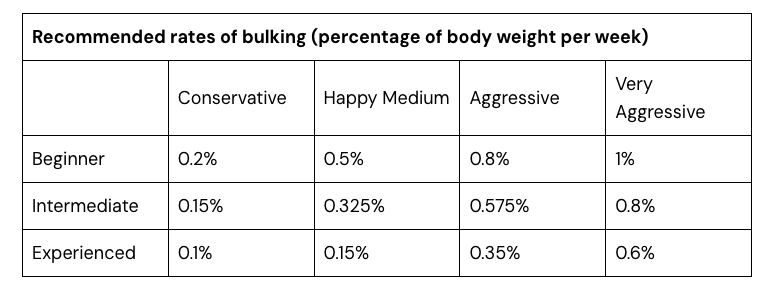

The next decision I face is deciding on the rate of weight gain I’d like to pursue, since this will determine my daily caloric intake. The trick is that the faster you go, the worse your ratio of muscle gained:fat gained will likely become. Luckily, here we have a nice array of published RCTs and quasi-experiments that can guide my decision making. Exercise scientist Greg Nuckols summarizes their findings in a neat little table in the article linked above:

Wonderful! I set my goal fairly conservatively at a 0.2% gain in bodyweight every week, and hope that the conservatism might help me “recomp” (simultaneously gain muscle and lose fat) a bit along the way.9

Now we need to determine how many calories I expend in a day to calculate the right caloric surplus to eat every day in order to reach that 0.2% gain. Here we’re back into tricky territory. A quick ask of Perplexity Deep Research suggests that there are over 20 proposed equations for calculating one’s Total Daily Energy Expenditure, each derived from studying different populations, each with relatively low accuracy rates. (Nearly all of them regularly underestimate Resting Metabolic Rate by 10% or more.)
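To make the arithmetic concrete, here is a minimal sketch of turning a weekly gain target into a daily calorie surplus. The 7,700 kcal per kg figure is an assumption brought in for illustration (a common rule of thumb, not something from the sources above), and the function names are hypothetical.

```python
# Assumption (not from the article): roughly 7,700 kcal per kg of bodyweight
# change. This rule of thumb is least accurate when the gain is mostly muscle.
KCAL_PER_KG = 7700.0


def daily_surplus_kcal(bodyweight_kg: float, weekly_gain_fraction: float = 0.002) -> float:
    """Daily calorie surplus implied by a weekly gain target (0.002 = 0.2%/week)."""
    weekly_gain_kg = bodyweight_kg * weekly_gain_fraction
    return weekly_gain_kg * KCAL_PER_KG / 7


def daily_target_kcal(expenditure_kcal: float, bodyweight_kg: float,
                      weekly_gain_fraction: float = 0.002) -> float:
    """Estimated expenditure plus the surplus needed to hit the weekly gain target."""
    return expenditure_kcal + daily_surplus_kcal(bodyweight_kg, weekly_gain_fraction)
```

For a hypothetical 80 kg person, a 0.2% weekly gain is 0.16 kg, which under that assumption works out to about 176 kcal/day. That is smaller than a 10% error on a 2,500 kcal expenditure estimate (250 kcal), which is one way to see why the choice of starting equation matters less than the feedback loop that corrects it.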

I could use the output of an online calculator based on one of these equations as a starting point, and just adjust over time. I’d start at 2500kcal/day, see how my weight has changed after a week or so, and adjust upwards or downwards as needed. This would constitute what I’m calling uncontrolled trial and error.

This is a good evolution beyond just taking some expert opinion on how much I should eat, since it starts to account for my individual variance. The problem is that it’s a rather sloppy feedback loop: weight data from the scale can be notoriously noisy day-to-day, and what happens if I have a bad day or two and only eat 2000 calories? Do I discard a week of data?
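A rough sketch of what one round of that uncontrolled trial and error could look like in code. The step size and tolerance are made-up parameters, and comparing only the first and last weigh-in is a deliberately naive choice to show where the sloppiness comes from.

```python
def adjust_intake(current_kcal: float, weights_kg: list[float],
                  target_weekly_fraction: float = 0.002,
                  step_kcal: float = 100.0, tolerance: float = 0.001) -> float:
    """Nudge daily calories up or down based on one week's first and last weigh-in.

    step_kcal and tolerance are hypothetical values chosen for illustration.
    """
    if len(weights_kg) < 2:
        raise ValueError("need at least two weigh-ins")
    observed = (weights_kg[-1] - weights_kg[0]) / weights_kg[0]
    if observed < target_weekly_fraction - tolerance:
        return current_kcal + step_kcal  # gaining too slowly: eat more
    if observed > target_weekly_fraction + tolerance:
        return current_kcal - step_kcal  # gaining too quickly: eat less
    return current_kcal
```

With a week that starts at 80.0 kg, a final reading of 80.6 kg says "cut calories" while a final reading of 79.9 kg says "add calories". That swing is well within normal day-to-day scale noise, so a single noisy weigh-in can flip the decision.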

Instead, I can use the app Macrofactor to approach this with algorithmic tinkering. By logging both a daily weigh-in and the food I eat every day, Macrofactor is able to continuously update an algorithmic estimation of my energy expenditure, and use that to regularly update its nutrition guidance to me. This is a big leap in precision and accuracy compared to the uncontrolled trial and error approach. The feedback loop is tighter and contains fewer opportunities to fool myself.
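To be clear, Macrofactor's actual algorithm is proprietary and I am not reproducing it here. As a hedged illustration of the general idea, an energy-balance estimate can be sketched by smoothing the weigh-ins and subtracting the energy stored as weight change from average intake. The smoothing factor and the 7,700 kcal/kg conversion are both assumptions.

```python
def ewma(values: list[float], alpha: float = 0.1) -> list[float]:
    """Exponentially weighted moving average to damp day-to-day scale noise."""
    trend = [values[0]]
    for v in values[1:]:
        trend.append(alpha * v + (1 - alpha) * trend[-1])
    return trend


def estimate_expenditure(intake_kcal: list[float], weights_kg: list[float],
                         alpha: float = 0.1, kcal_per_kg: float = 7700.0) -> float:
    """Average intake minus the energy stored as trend-weight change per day.

    alpha and kcal_per_kg are illustrative assumptions, not Macrofactor's values.
    """
    if len(intake_kcal) != len(weights_kg) or len(weights_kg) < 2:
        raise ValueError("need matching daily logs covering at least two days")
    trend = ewma(weights_kg, alpha)
    days = len(weights_kg) - 1
    stored_per_day = (trend[-1] - trend[0]) * kcal_per_kg / days
    return sum(intake_kcal) / len(intake_kcal) - stored_per_day
```

Because the estimate uses logged intake rather than planned intake, a 2,000-calorie day simply lowers the average instead of invalidating the week. One caveat of this particular sketch: an EWMA lags behind real changes, so a short log will understate the true trend.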

Now we come to determining our macronutrient profile - if I should eat 2,632 calories a day (my current guidance from Macrofactor), how much of that should be protein vs. carbohydrates vs. fats?

Adequate protein intake is most important, especially since my goal is to gain muscle. Here we have a fun case of many published meta-analyses trying to pin down the “optimal” intake, several of which don’t agree with each other. Greg Nuckols again has a fantastic article that’s an approachable review of the literature. It’s long, but readers of this newsletter should love it: Nuckols even gets to hit on topics like Simpson’s Paradox and the Cult of Stat Sig in the process.

The one type of evidence that we haven’t introduced yet is the single-case experimental design. I am slightly misappropriating the term here: there is such a thing as a SCED worthy of publication, following clear guidelines that include blinding of therapists and patients along with numerous other design criteria to reduce the risk of bias and improve hopes of generalizability/external validity. I will not be doing that. There will be no blinding, since I alone am the triune patient, practitioner, and evaluator.

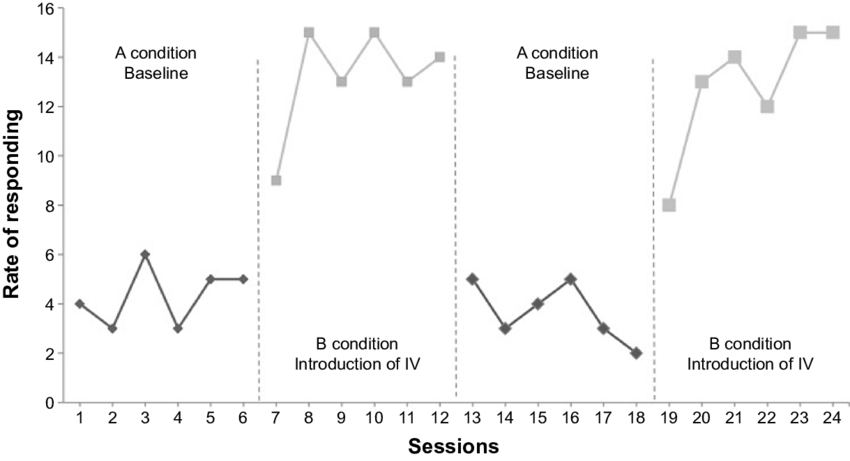

But single-case experimental designs, namely withdrawal designs (e.g. ABAB, like a kind of crude switchback test), do offer me a worthwhile tool to apply statistical analysis to my tinkering and avoid fooling myself - especially important when evaluating extraordinary claims... or expensive interventions!

ABAB designs give us three opportunities to observe the potential effect of the treatment: the first switch from A → B, the withdrawal from B → A′, and the return from A′ → B′.
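Those three transitions can be sketched in a few lines. This is a descriptive check only, not a significance test, and the phase labels and function names are hypothetical.

```python
from statistics import mean


def abab_transition_effects(phases: dict[str, list[float]]) -> dict[str, float]:
    """Difference in phase means at each of the three ABAB switches.

    phases maps 'A1', 'B1', 'A2', 'B2' to the measurements taken in that phase.
    """
    m = {name: mean(values) for name, values in phases.items()}
    return {
        "A->B": m["B1"] - m["A1"],
        "B->A'": m["A2"] - m["B1"],
        "A'->B'": m["B2"] - m["A2"],
    }


def consistent_direction(effects: dict[str, float]) -> bool:
    """True if the effect appears, reverses on withdrawal, then reappears."""
    first = effects["A->B"]
    withdrawal = effects["B->A'"]
    second = effects["A'->B'"]
    return first * second > 0 and first * withdrawal < 0
```

The logic of the design is that a real treatment effect should show up with the same sign at both introductions and the opposite sign at withdrawal. A change that appears once and never reverses is more likely a trend or a coincidence.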

Maybe in my quest to determine a macronutrient profile, I could conduct a multi-month ABAB test around eating a ketogenic diet. If you’ve been living under a rock for the past 10 years, ketogenic (high-fat, ultra-low-carb) diets were quite the rage for a minute there around 2015-2020 (the hype largely fueled by a web of experts and influencers that Tim Ferriss helped promote). Ketogenic diets have been touted for weight loss and cardiovascular health benefits, established as having some efficacy for treating various neurological disorders10, and studied as a supplemental therapy for cancer treatment11.

However, they aren’t universally safe. Over the last few years, extreme cases and meta-analyses have suggested heterogeneous outcomes where some patients see moderate to extreme elevations of LDL cholesterol when eating ketogenic diets.

If I were interested in the potential health benefits of a ketogenic diet, I could run a withdrawal experiment where I alternated between a standard/balanced diet and a ketogenic one, getting a lipid panel done towards the end of each phase to measure any potential adverse impact.

(In reality, nutrition is maybe not the easiest field for me to come up with an example use for single-case experiment designs. It may be far easier to imagine me running an ABAB experiment on my sleep, buying some ridiculously-expensive gadget like the Eight Sleep temperature-control mattress, and enabling/disabling it over a few phases before analyzing sleep data collected by my Oura ring. An exploration for another time.)

But putting aside the hypothetical, for now, I’m just eating a fairly balanced diet. Shooting for close to 1g/day of protein, trying to keep fat intake <30% of my total daily calories, and filling out the rest with carbohydrates. The source of my protein target was discussed above, but where did I get that fat intake guideline from? As best as I can tell, just evidence-driven expert opinion. I picked up a copy of Eric Helms’ “Muscle and Strength Pyramid: Nutrition” book, and this fat intake was a guideline for weight-gain phases that didn’t contain a direct citation. (The book is, otherwise, incredibly thorough in its references to the literature.)
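As a rough sketch of how that split translates into grams, using the standard 4/4/9 kcal-per-gram conversion factors for protein, carbohydrate, and fat. The protein target is passed in as a parameter, the 30% fat figure is treated as a ceiling, and the function name is hypothetical.

```python
KCAL_PER_G = {"protein": 4, "carbs": 4, "fat": 9}


def macro_targets(total_kcal: float, protein_g: float,
                  fat_fraction: float = 0.30) -> dict[str, float]:
    """Fix protein first, cap fat at a fraction of calories, fill the rest with carbs."""
    protein_kcal = protein_g * KCAL_PER_G["protein"]
    fat_kcal = total_kcal * fat_fraction
    carbs_kcal = total_kcal - protein_kcal - fat_kcal
    if carbs_kcal < 0:
        raise ValueError("protein and fat targets exceed total calories")
    return {
        "protein_g": protein_g,
        "fat_g": fat_kcal / KCAL_PER_G["fat"],
        "carbs_g": carbs_kcal / KCAL_PER_G["carbs"],
    }
```

Calling `macro_targets(2632, 160)` with an illustrative 160 g protein target gives a fat ceiling of about 88 g and roughly 300 g of carbohydrate. The 160 g figure is a placeholder for the example, not my actual target.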

Desperate for Metrics

Now that we’ve explored a few of the methodologies available to us, we need to circle back to the tricky consideration of choosing metrics.

How did I come to the conclusion that my nutrition goals should be focused on fueling muscle gain and fat loss? What metrics did I assess?

Obviously, the one metric we’ve got going for us here is bodyweight: it’s cheap, easy, and reasonably accurate to measure at home, assuming you take the measurement under consistent conditions.12

Interpreting what the scale tells me is another question. I could calculate my Body Mass Index, which is so crude a measure as to be almost completely useless for actioning or predicting individual health. But I want to know more granularly how much of my bodyweight is lean muscle, and how much is fat. My so-called “smart” scale displays estimates of these numbers via bioelectrical impedance, but this method is so inaccurate and noisy that it too is essentially useless - one study found errors >10% in measurements taken with such a scale for 69% of subjects.

The internet tells me that DEXA scans (Dual-energy X-ray Absorptiometry) provide a gold-standard measurement of bone mineral density13, which some have extrapolated to mean that it also provides a “gold standard” measurement of body composition. This isn’t quite the case though - some research has found absolute errors between DEXA scans as large as +/- 5% bodyfat (an eyeball-able magnitude of difference), leading Eric Trexler to doubt if they’re worthwhile for this purpose.

I decided to operate under the assumption that it was better than nothing, and figured it was an interesting way to spend an hour on a Saturday morning anyways. DEXA scans offer two numbers in particular that might provide helpful input for my body comp goal-setting: Appendicular Lean Mass Index, and Visceral Adipose Tissue (adipose = fat). Interpreting those numbers is a whole other consideration though. There is no clinical guidance per se on what those numbers should be. For this I had to turn to material from health influencer and longevity doc Peter Attia, who wants to see his patients above the 75th percentile in ALMI for their gender and age, and below the 20th percentile for VAT (10th percentile is even better).

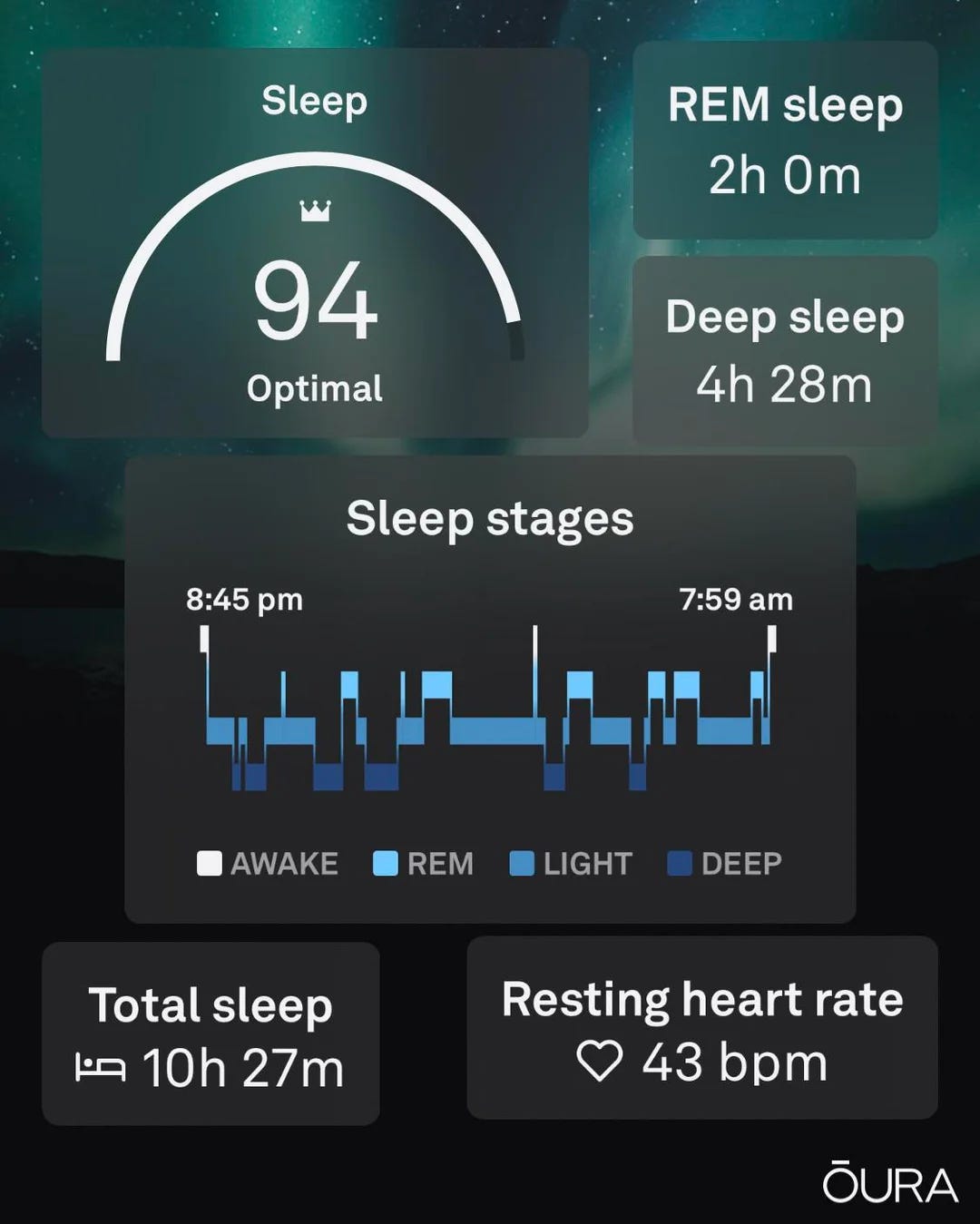

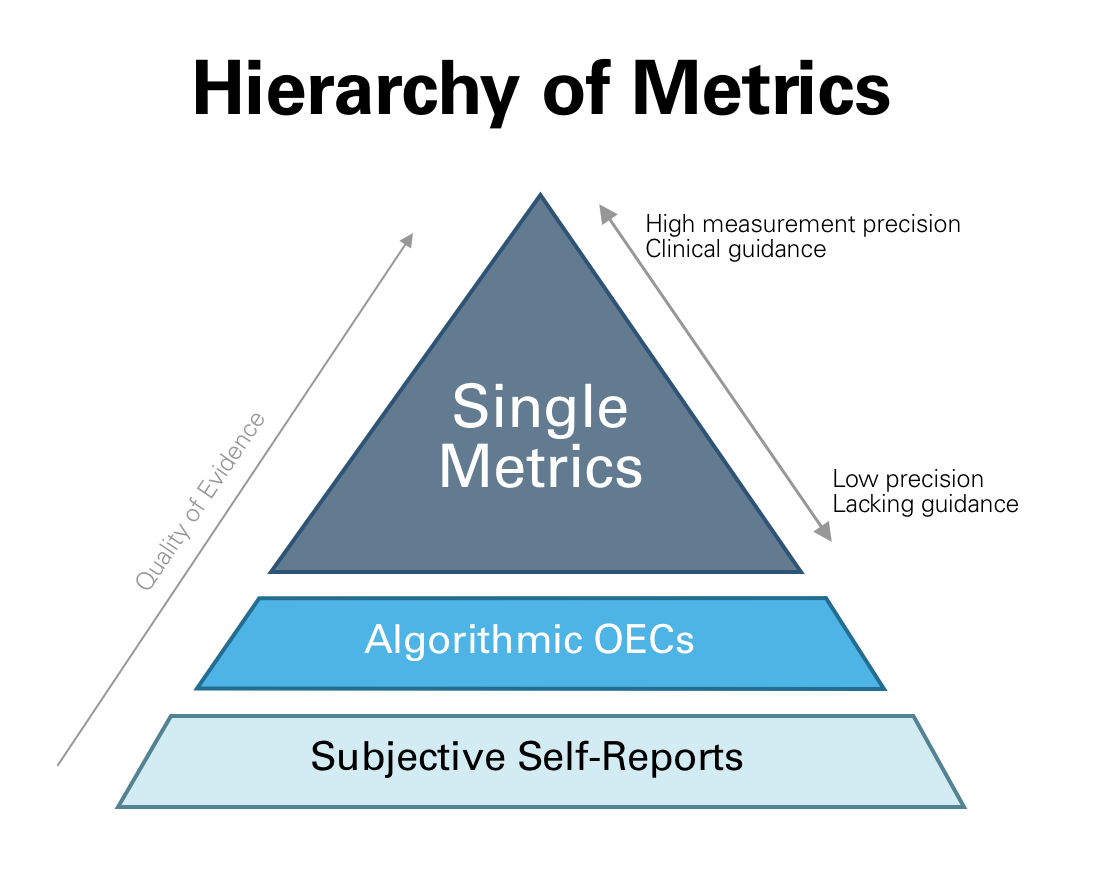

Beyond examples like these of single metrics that we can measure with varying degrees of reliability, some other areas of health that I care about present me with the opportunity to measure via what I call Algorithmic OECs - single numbers calculated by proprietary black boxes, meant to be a holistic representation of many metrics’ signals. (OEC for Overall Evaluation Criterion, to borrow Ronny Kohavi’s coined term for a metric-of-metrics used for decision evaluation.) Examples of these Algorithmic OECs could include the Oura ring’s “Sleep Score”14 which aims to summarize your sleep quality each night, or the Muse EEG headband’s “Alpha Peak” metric15 which aims to measure brain health. Of course, the black box nature makes these metrics harder to trust, and we should also assume that they’re noisier given the underlying variance of their component metrics.

I have two more designations for metrics that fill out each extreme of the hierarchy. At the top (most rigorous) would be metrics that are precisely-measurable16 and have established clinical guidelines that define what “good” looks like (although “good” can be a pretty low bar for some metrics, and I often opt-in to expert guidance that sets more aggressive targets). We can go back to the LDL cholesterol example here: it is very easy to order a lipid panel from a lab like Quest Diagnostics17 and get accurate results. The report you get back will tell you that the “desirable range” for LDL-C is “<100 mg/dL for primary prevention; or <70 mg/dL for patients with CHD or diabetic patients with > or = 2 CHD risk factors.” From there it’s up to you if you’d like to follow more aggressive guidance.

On the least-rigorous end of the hierarchy, though still a useful or necessary tool in some cases, are subjective self-assessments. I could rate myself on a scale out of 10 each day on how easy I found it to focus on my tasks, or how refreshed I felt after waking up in the morning.

Altogether, we end up with a metrics hierarchy that looks like this:

Getting to Work

OK, so we have a bit of a map to understand what we should measure and how we should assess our measurements. Obviously this is only part of the picture - actually getting to work and starting to apply interventions will require some planning, and that planning will need to take into account things like how tight of a feedback loop we can create. Some interventions have more acute effects, i.e. work quicker than others. Some metrics we can measure daily (bodyweight), some would be a real pain (or cost) to measure more frequently than monthly (DEXA scans).

But this at least covers Orientation for what we’ll explore as we get further into The Personal Lab. I look forward to bringing you dispatches from my own health experiments, but even more importantly, more ideas on how you could run your own takes on this approach.

To be clear: I don’t think any of this nonsense is necessary to be healthy. But it’s a fun thought experiment, and it turns out that’s what I needed to get me off my couch and working.

If all this is a topic that interests you, do me a favor and leave a quick comment - or even better, share this post on LinkedIn. I have no idea what the appetite is for this far of a diversion from my normal focus on business applications of experimentation, so I’ll (ironically) be reliant on some light “user testing” here to get your feedback. Plus, this newsletter is still a little baby trying to chase those elusive first 100 subscribers…

Errata:

Some brief corrections on my previous essay, “Klarna's AI Experiment Is Going Fine, Actually”:

I mistakenly suggested that Clayton Christensen had once pegged the failure rate of new products introduced to market at 95%. Turns out this is a complete fabrication of a quote, and quite a popular one at that. According to this paper, Christensen himself denies ever saying that and thinks the true failure rate is likely lower. There is certainly no actual primary source attribution for the quote, regardless.

A reader on LinkedIn pointed out a confusing partial quotation from the IBM 2025 CEO Survey - the precise stat in question is that “only 25% of AI initiatives have delivered expected ROI”. It’s also worth noting that CEOs were asked about AI initiatives over the last three years - certainly notable given the current pace of innovation!

1. I personally have consumed a slightly-worrying amount of Johnson’s content over the last 2 years or so, including his hour-long streams of consciousness on YouTube livestreams and the officially-sanctioned Netflix propaganda “documentary”.

2. I am almost certainly underselling how problematic Johnson is. An increasing number of people are pointing out a litany of false claims and coverups that Johnson has made, and Johnson is not just un-scientific, he is arguably anti-science: he routinely blocks actual longevity docs and researchers when called upon to collaborate and contribute to actual scientific research.

3. I am struck, in writing this, by how much this shotgun approach feels like the ZIRP-era tech mantra of “Move Fast and Break Things” misapplied to medicine. This also makes me 2 for 2 on mentioning the quote negatively in my newsletter.

4. Ironically, Nassim Taleb has other bones to pick with Johnson too.

5. It does not take a particularly astute armchair psychologist to draw a dotted line between Johnson’s quest to “start a new religion” (cult) around his “Don’t Die” philosophy and his traumatic experiences as an ex-Mormon who left the church and was summarily excommunicated and divorced.

6. https://jamanetwork.com/journals/jamainternalmedicine/fullarticle/217054

7. https://academic.oup.com/jcem/article-abstract/108/9/2424/7079754?redirectedFrom=fulltext

8. https://pmc.ncbi.nlm.nih.gov/articles/PMC5686928/

9. A topic for another day. If you’re curious what the science says about recomposition, here’s another stellar Macrofactor blog article - this one from Eric Trexler.

10. https://www.nature.com/articles/s41392-021-00831-w

11. https://pmc.ncbi.nlm.nih.gov/articles/PMC8153354/

12. mine: first thing in the morning, nude

13. https://www.hopkinsmedicine.org/health/treatment-tests-and-therapies/bone-densitometry

14. https://ouraring.com/blog/sleep-score/

15. https://choosemuse.com/blogs/news/muse-alpha-peak

16. When I say “precisely-measurable”, I mean “realistically” for an average Ryan like me, i.e. there are certainly more exact approaches to body composition, as an example (usually taking a combination of DEXA scans with 3+ other approaches), but these are not generally accessible to the public.

17. Blood tests, on the other hand, are readily available for anyone - you can even self-order them in almost every US state using a site like jasonhealth.com. A standard lipid panel in my home state of MA, with all fees and taxes, is only $28.

For personal experimentation of the "enabling/disabling [an Eight Sleep mattress] over a few phases before analyzing sleep data collected by my Oura ring" variety, I suggest looking into the iOS app Reflect https://ntl.ai/reflect/

Besides switchback-style experiments, it supports day-level randomization, which is necessary if you're running more than one such experiment at a time. And it's tolerant of less-than-full compliance (it will tell you whether today should be T or C for each intervention, but allows you to log the treatment status even when you don't comply). I'm afraid that all such experiments are hopelessly underpowered and so have given up on the approach myself. But the tool is decently well-designed for it.